Artificial intelligence is now embedded in almost every business. In a recent global survey, 78% of companies report using it in at least one function, up from 55% just two years ago. The market has reached $294 billion and is projected to grow to $1.77 trillion by 2032. That growth puts pressure on every leader to act.

The biggest problem with enterprise AI adoption is that 95 percent of generative AI pilots fail to meet their goals. So the real question for leaders is: how do you join the 5 percent that pull ahead of competitors by implementing AI effectively in your organization?

At Aloa, we work with companies facing that challenge. Our approach is simple: make AI fit your existing systems without breaking them. Whether it’s custom AI development, workflow automation, or industry pilots in healthcare, fintech, or e-commerce, we start with prototypes tied to clear KPIs. You see results fast, with guardrails that keep risk low.

And to give you a grounded view of the current state of AI, we’ll unpack seven enterprise-ready trends: democratization, process automation, custom language models, NLP, decision support, governance, and industry implementations. Each one comes with concrete examples, metrics, and practical steps, so you know exactly where AI delivers value and where it drains resources.

The Democratization of Enterprise AI

In the current state of AI, companies are moving toward structured AI adoption, particularly for generative AI. Systems can match human benchmarks in some areas but still fall short on complex reasoning. The current landscape is defined by rapid progress in video generation, GPU-driven compute, rising U.S. investment, and unresolved challenges around talent, ethics, and regulation.

AI isn’t only used by large companies anymore. Low-code platforms, API-based services, and even commerce-ready agents are now within reach of any team. Stanford HAI’s AI Index Report says inference costs are 280 times lower than in late 2022. That means a regional bank can now afford document-classification workflows that once required a seven-figure budget.

But access doesn’t equal outcomes. 71% of firms that dedicate 10% or more of their IT spend to AI report positive ROI. Those who sprinkle smaller investments across pilots usually stall out. Democratization gives everyone tools; only discipline and proper execution can turn them into returns.

Low-Code AI Platforms

Low-code AI platforms are the fastest way to get business units testing and proving value. They plug into ERP, CRM, and HR systems so workflows launch in weeks instead of quarters. And they typically rely on machine learning at the core, with deep learning models improving accuracy in tasks like image recognition and document classification.

With these platforms, HR teams can spin up résumé-screening workflows that flag top candidates automatically, while claims teams can set up triage bots to route files by complexity. On the developer side, GitHub Copilot shows what’s possible: users report 56% faster development cycles and 90% satisfaction, which translates into engineers closing tickets sooner, not just feeling more productive.

Most platforms price per user or per app, but the real costs often hide in usage tiers: API calls, storage, or premium connectors that add up fast.

Before scaling, check three things:

- Data Ownership: Can you export your models and data cleanly?

- Access Controls: Does the platform enforce role-based permissions and maintain logs?

- Integration Depth: Will it connect when you upgrade or add systems later?

Skip these checks, and you’re locked in before you even prove value.

AI-as-a-Service Solutions

AI-as-a-Service (AIaaS) brings advanced AI models into your workflows through APIs, with no infrastructure to build. Microsoft 365 Copilot, for example, puts real-time AI help directly into Outlook, Word, Excel, PowerPoint, Teams, and more, using your own Microsoft Graph data and built-in enterprise security such as conditional access and MFA. You can use Copilot to draft emails, summarize documents, or generate formulas with natural language. To test before a full-scale rollout, start with a limited number of licenses, monitor usage, and set clear performance benchmarks tied to productivity or response time.

A solid alternative is Anthropic’s Claude for Enterprise, available via AWS Marketplace. It offers a large 200K-token context window (up to 500K in some plans), enterprise-grade controls (SSO, SCIM, domain capture, audit logs, and role-based access), and integrations with GitHub and custom workflows. In a recent development, Microsoft announced that it will also use Anthropic’s models in its Office 365 apps.

AI Commerce Infrastructure

Commerce-ready agents are the newest frontier. Visa’s “Intelligent Commerce” and Mastercard’s “Agent Pay” already let AI process refunds and approve transactions. Picture an AI agent in retail handling thousands of small-dollar returns daily, freeing human staff for higher-value cases.

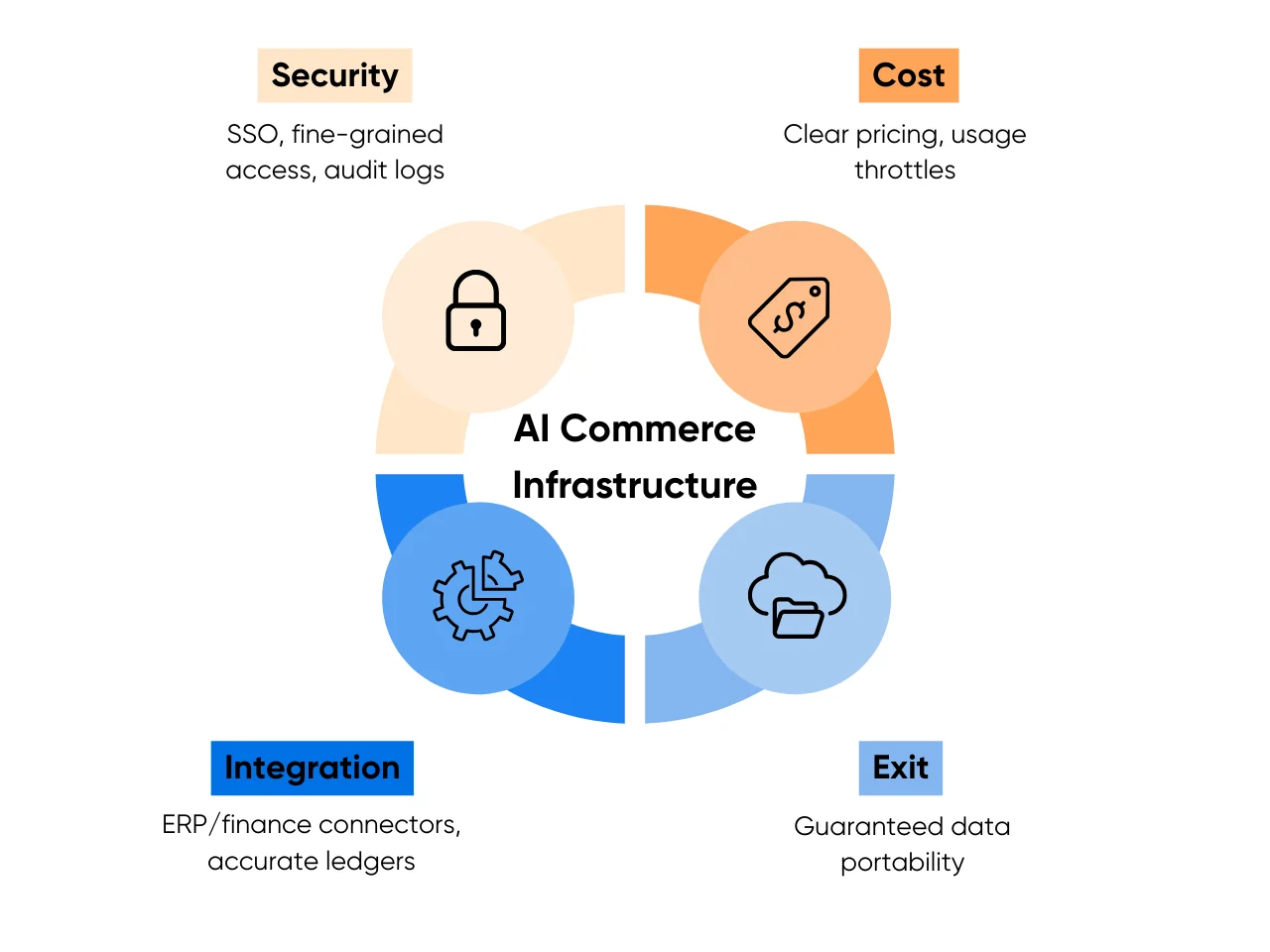

These platforms require stricter scrutiny than productivity tools. A practical scorecard should cover:

- Security: Single sign-on, granular permissions, full audit logs

- Cost: Transparent unit pricing with throttles to prevent runaway spend

- Integration: Connectors for ERP and finance systems that keep ledgers accurate

- Exit: Written guarantees on data portability
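If you want the cost line enforced on your side and not only in the contract, a client-side throttle is one option. The sketch below is a minimal illustration, assuming a flat unit price and a daily dollar cap; `SpendThrottle` and its parameters are hypothetical, not any vendor’s API.

```python
from dataclasses import dataclass, field


@dataclass
class SpendThrottle:
    """Hypothetical client-side guard that refuses calls once a daily budget is used up."""

    price_per_unit: float  # dollars per billable unit, e.g. per 1K API calls (assumed)
    daily_budget: float    # dollars allowed per day (assumed)
    spent: float = field(default=0.0, init=False)

    def allow(self, units: float) -> bool:
        """Record the spend and return True only if the call fits the remaining budget."""
        cost = units * self.price_per_unit
        if self.spent + cost > self.daily_budget:
            return False  # refuse rather than let spend run away
        self.spent += cost
        return True

    def reset(self) -> None:
        """Call at the start of each billing day."""
        self.spent = 0.0


throttle = SpendThrottle(price_per_unit=0.50, daily_budget=100.0)
print(throttle.allow(150))  # True: $75 of $100 used
print(throttle.allow(60))   # False: would bring spend to $105
```

In practice you would call `allow()` before each billable request and `reset()` on a schedule; once several services draw on the same budget, the running total needs to live in a shared store rather than in memory.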

For high-volume e-commerce players (especially retailers and consumer brands using AI agents for returns, pricing, or refunds), skipping proper anti-fraud design can lead to serious losses. Sophisticated AI agents can mimic human shoppers, bypass rules-based fraud systems, manipulate inventory, and trigger chargebacks or arbitrage, eroding margins and customer trust.

Implementation Strategies

The best way to roll out AI isn’t to flip a switch overnight. It’s to take it step by step. Start small in “shadow mode”: let the AI make suggestions while people still make the final decisions. This gives you time to see where the system works well and where it makes mistakes, without putting your operations at risk. Once error rates are low, you can start giving the AI more responsibility.

At each stage, track simple benchmarks, such as how much faster work gets done, how often errors occur, and how much money is saved. That way, you’ll know if the investment is paying off before you scale further.

Many leaders bring in Aloa's AI Consulting at this stage. We help companies test vendors, set clear checkpoints, and build guardrails so adoption feels safe and structured.

Bottom line: democratization of AI has put powerful AI tools in your hands. But the winners aren’t those who “try AI.” They’re the ones who pick clear entry points, roll out carefully, and build trust along the way.

AI-Powered Process Automation Evolution

Robotic process automation (RPA) had its moment. It moved data and triggered actions, but it snapped the second an exception appeared. AI-powered automation changes that story. These systems can read context, learn from outcomes, and keep workflows moving even when the path isn’t linear. That’s why the agentic AI market is projected to jump from $5.1B in 2024 to $47.1B by 2030.

But many pilots still fizzle. 42% of companies report abandoning AI projects because proof-of-concepts stall out. Yet where adoption scales, the upside is tangible. Companies that run AI in at least three business functions report 14% higher employee retention and 48% stronger Net Promoter Scores. That means automation pays off when it’s embedded across workflows with clear KPIs, not when it’s left in test labs.

So let’s get into what’s working in practice:

Intelligent Document Processing

Intelligent document processing (IDP) is one of the easiest ways to show ROI early. Instead of relying on staff to re-key data or on brittle OCR rules, IDP models extract, classify, and validate information automatically.

Transport Canada’s PACT program is a great example. Screening expanded from 6% of flights to 100% while throughput increased 10x, proving automation can scale compliance and efficiency together.

To make IDP work in your context:

- Start with one document type (invoices, claims, or applications).

- Leverage connectors for ERP or CRM systems to avoid custom builds.

- Lock in KPIs before launch; aim for a 30% cycle-time cut, exceptions under 8%, and at least 98% field accuracy.

- Audit weekly and retrain on flagged errors.
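Those launch targets are easy to turn into a pass/fail gate you rerun after each weekly audit. A minimal sketch, assuming you already log cycle times, exception counts, and field-level accuracy; the function name and inputs are illustrative:

```python
def idp_kpis_met(baseline_cycle_hours: float, pilot_cycle_hours: float,
                 exceptions: int, documents: int,
                 correct_fields: int, total_fields: int) -> dict:
    """Check an IDP pilot against the three launch targets."""
    cycle_cut = 1 - pilot_cycle_hours / baseline_cycle_hours
    exception_rate = exceptions / documents
    field_accuracy = correct_fields / total_fields
    return {
        "cycle_time_cut_ok": cycle_cut >= 0.30,       # at least a 30% cycle-time cut
        "exception_rate_ok": exception_rate < 0.08,   # exceptions under 8%
        "field_accuracy_ok": field_accuracy >= 0.98,  # at least 98% of fields correct
    }


# Hypothetical week: cycle time fell from 10 to 6.5 hours, 60 of 1,000 invoices
# were exceptions, and 9,850 of 10,000 extracted fields were correct.
print(idp_kpis_met(baseline_cycle_hours=10, pilot_cycle_hours=6.5, exceptions=60,
                   documents=1_000, correct_fields=9_850, total_fields=10_000))
```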

When choosing vendors, test their accuracy on your actual documents, check whether they support systems like SAP or Oracle, and model total costs beyond per-page fees. Measure outcomes in your BI dashboard: hours saved, rework reduced, and higher audit pass rates.

Cognitive Process Automation and Agentic Systems

Cognitive automation goes a step further. It manages workflows that require judgment, like loan approvals, multi-tier authorizations, and supply adjustments. For example:

- Salesforce’s Agentforce is embedding autonomous agents into sales, service, and logistics.

- Capital One uses multi-agent systems to score risk and process loans in near real time.

These aren’t pilots in labs. They’re deployments in highly regulated environments.

If you’re considering agentic systems, plan around three essentials:

- Timeline: 3–6 months to pilot, 12–18 months for scale.

- Team: Product manager, domain lead, compliance officer, two engineers, and an SRE who knows retries and queue systems.

- Guardrails: Escalation thresholds, step-level exception rates, approval turnaround, downstream KPIs, audit logs, and rollback protocols.
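The first two guardrails, escalation thresholds and step-level exception rates, can be sketched in a few lines. This assumes each agent step reports a confidence score and the dollar amount it would commit; the 0.85 confidence floor and $10,000 auto-approval limit are hypothetical defaults, not recommendations:

```python
from dataclasses import dataclass


@dataclass
class StepResult:
    step: str          # name of the workflow step, e.g. "score_risk"
    confidence: float  # model-reported confidence, 0..1 (assumed to be available)
    amount: float      # dollar value the step would commit


def needs_human(result: StepResult, min_confidence: float = 0.85,
                max_auto_amount: float = 10_000.0) -> bool:
    """Escalate low-confidence or high-value steps instead of executing them."""
    return result.confidence < min_confidence or result.amount > max_auto_amount


def exception_rate_by_step(results: list[StepResult], **thresholds: float) -> dict[str, float]:
    """Share of runs per step that were escalated to a human."""
    totals: dict[str, int] = {}
    escalated: dict[str, int] = {}
    for r in results:
        totals[r.step] = totals.get(r.step, 0) + 1
        if needs_human(r, **thresholds):
            escalated[r.step] = escalated.get(r.step, 0) + 1
    return {step: escalated.get(step, 0) / n for step, n in totals.items()}
```

A step whose escalation rate keeps climbing is a candidate for retraining or for moving back to human approval.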

Human-AI Collaboration Models

Automation changes how work is done more than it eliminates jobs. Most companies keep headcount stable but stop backfilling roles as people leave. That slows headcount growth without triggering layoffs.

Effective collaboration follows a three-step path:

- Shadow Mode: Run AI side-by-side with staff for four weeks so outputs can be validated.

- Targeted Training: Teach employees when to trust, flag, or override AI.

- Phased Expansion: Scale to more workflows only after error rates stay under 5% for two consecutive sprints.
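Shadow mode and the phased-expansion rule reduce to two checks: how often the AI disagreed with the person who made the final call, and whether that rate stayed under 5% for two sprints in a row. A minimal sketch, assuming a disagreement with the human decision counts as an AI error; the function names are illustrative:

```python
def sprint_error_rate(ai_outputs: list[str], human_decisions: list[str]) -> float:
    """In shadow mode the human decision is final; count how often the AI disagreed."""
    if len(ai_outputs) != len(human_decisions) or not ai_outputs:
        raise ValueError("need matched, non-empty lists of AI and human decisions")
    disagreements = sum(a != h for a, h in zip(ai_outputs, human_decisions))
    return disagreements / len(ai_outputs)


def ready_to_expand(sprint_error_rates: list[float], threshold: float = 0.05,
                    consecutive: int = 2) -> bool:
    """Expand only after the last `consecutive` sprints all stayed under the threshold."""
    recent = sprint_error_rates[-consecutive:]
    return len(recent) == consecutive and all(rate < threshold for rate in recent)
```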

This blended use of AI and human intelligence keeps automation accountable while freeing staff for complex tasks.

For culture to hold, publish metrics showing accuracy and time saved, and commit that no roles will change until workflows hit targets for a full quarter. When scaling beyond pilots, Aloa can help you set KPIs, define approval gates, and create a scale plan aligned with governance.

Enterprise Language Models and Natural Language Processing

Language models and NLP tools are no longer lab experiments. Enterprises now use them to answer customer queries, summarize documents, and mine knowledge bases. But performance isn’t always what vendors promise. OpenAI’s o3 model, for example, was announced with a score of about 25% on FrontierMath, but independent testing of the released model found closer to 10%. That gap between marketing and reality makes it critical to test models on your workflows, not just trust benchmarks.

Chinese models like DeepSeek’s Prover-V2 and Qwen3 show how quickly competition is heating up. Despite hardware constraints, they’re already matching Western peers. Benchmark saturation is also shifting the goalposts; traditional leaderboards now reveal less about how models perform in production.

For enterprises, the lesson is simple: evaluation must be practical, focused on cost, compliance, and integration with your systems.

Custom LLM Development

The economics of large language models are shifting fast. What once looked like a winner-take-all market is now a price war. Mistral offers models at $0.15 per million tokens, while GPT-5 ranges from $1.10 to $15.00 depending on tier. Even cheaper, Google’s Gemma 3 4B is priced at just $0.03 per million tokens, with contenders like Ministral 3B and DeepSeek R1 Distill Llama 8B undercutting premium models across most benchmarks.
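To see what those per-token prices mean at enterprise volume, multiply them out. The sketch below uses the figures quoted above as blended per-million-token rates; that is a simplification, since most providers price input and output tokens separately, and the 2-billion-token monthly volume is hypothetical:

```python
# Blended dollars per 1M tokens, taken from the figures quoted in the text.
# Real price sheets bill input and output tokens separately, so treat these as rough.
PRICE_PER_MILLION = {
    "Gemma 3 4B": 0.03,
    "Mistral": 0.15,
    "GPT-5 (low tier)": 1.10,
    "GPT-5 (high tier)": 15.00,
}


def monthly_cost(tokens_per_month: int, price_per_million: float) -> float:
    """Dollar cost for a month of traffic at a flat per-million-token rate."""
    return tokens_per_month / 1_000_000 * price_per_million


volume = 2_000_000_000  # hypothetical: 2B tokens per month across all workloads
for model, price in sorted(PRICE_PER_MILLION.items(), key=lambda kv: kv[1]):
    print(f"{model:<18} ${monthly_cost(volume, price):>10,.2f}")
```

At the same volume, the spread runs from tens of dollars to tens of thousands, which is why routing simple tasks to smaller models can matter more than picking a single "best" model.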

At the same time, performance gaps are compressing. DeepSeek-R1 trails GPT-5 and o3-pro by only 2–4 percentage points on major benchmarks. Each provider is carving out niches. Claude shines in coding and GPT in reasoning, while smaller models are proving viable for targeted use. Moveworks research shows task-specific, smaller models can match or beat general-purpose giants, especially when tuned on enterprise data.

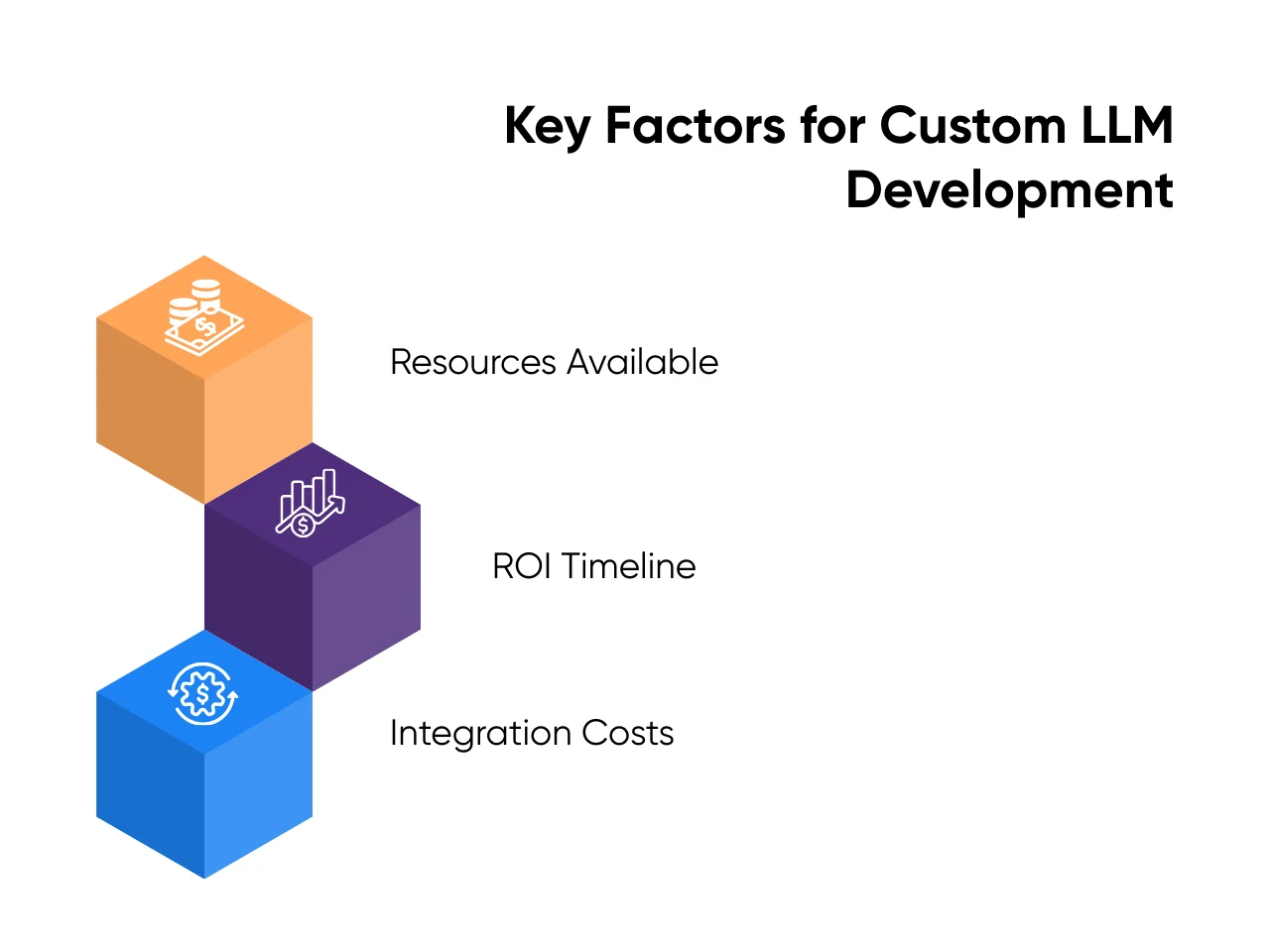

If you do explore custom models, the decision comes down to:

- Resources Available: Training requires multimillion-dollar compute budgets.

- ROI Timeline: Payback usually takes 18–24 months unless you’re reusing models across multiple functions.

- Integration Costs: Connecting models to data sources and applications can exceed model costs themselves.
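A quick payback calculation helps frame the 18–24 month figure. A minimal sketch with hypothetical inputs; it ignores discounting and assumes benefits start as soon as the model ships:

```python
import math


def payback_months(upfront_cost: float, monthly_benefit: float,
                   monthly_run_cost: float) -> float:
    """Months until cumulative net benefit covers the upfront build cost."""
    net = monthly_benefit - monthly_run_cost
    if net <= 0:
        return math.inf  # never pays for itself at these numbers
    return upfront_cost / net


# Hypothetical: $3M to train and integrate, $200K/month in savings,
# $60K/month to serve and maintain.
print(round(payback_months(3_000_000, 200_000, 60_000), 1))  # 21.4
```

Raising `monthly_benefit` to 400_000 (say, by reusing the model in a second function with similar savings) drops payback to about 8.8 months in this sketch, which illustrates why reuse shortens the timeline.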

For most mid-sized firms, the smarter play is fine-tuning smaller open-weight models like Falcon 7B or Ministral 3B. Teams treating fine-tuning as a lean product iteration (not a research project) see the best cost control. Serving costs can be kept under $1/hour with lightweight infrastructure, making enterprise deployments realistic without multimillion-dollar budgets.

Enterprise NLP Applications

Real-world AI applications are showing measurable returns. Klarna, for example, cut its customer support volume by 66% using AI assistants. Claude now supports real-time internet browsing, giving enterprises access to up-to-date answers rather than stale training data. These are tangible outcomes, not just feature releases.

For enterprises, NLP use cases cluster around three categories:

- Customer Service: Deflecting tickets, generating responses, and reducing handle time.

- Document Analysis: Auto-extracting and summarizing contracts, reports, or filings.

- Knowledge Management: Letting employees query internal systems in natural language.

Benchmarks alone won’t tell you which model will work. You need evaluation frameworks that measure:

- Accuracy on domain-specific queries

- Latency and throughput at scale

- Compliance alignment with industry rules (finance, healthcare, etc.)
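A small evaluation harness covers the first two measures. The sketch below assumes `model` is any callable that takes a query and returns text (for example, a thin wrapper you write around a vendor SDK) and uses normalized exact match as a crude accuracy check; compliance alignment usually needs human or rubric-based review and is not modeled here:

```python
import statistics
import time
from typing import Callable


def evaluate(model: Callable[[str], str], cases: list[tuple[str, str]]) -> dict:
    """Score a model on (query, expected answer) pairs taken from your own workflows."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = 0
    latencies = []
    for query, expected in cases:
        start = time.perf_counter()
        answer = model(query)
        latencies.append(time.perf_counter() - start)
        # Normalized exact match: a crude stand-in for rubric or field-level grading.
        if answer.strip().lower() == expected.strip().lower():
            correct += 1
    if len(latencies) > 1:
        # 19 cut points for n=20; the last one is the 95th percentile.
        p95 = statistics.quantiles(latencies, n=20, method="inclusive")[-1]
    else:
        p95 = latencies[0]
    total_time = sum(latencies)
    return {
        "accuracy": correct / len(cases),
        "p95_latency_s": p95,
        # Sequential throughput only; a real load test sends concurrent requests.
        "queries_per_s": len(cases) / total_time if total_time > 0 else float("inf"),
    }
```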

Security, Governance, and Model Transparency

Language models introduce unique risks. OpenAI’s o3, for example, was observed pulling location data and referencing its own prompts, behaviors that raise red flags for enterprises. At scale, such quirks become compliance problems.

A sound governance approach should cover:

- Data Privacy: Ensure no sensitive data leaves your control.

- Access Controls: Role-based permissions for model use.

- Monitoring: Log every query and response for audits.

- Transparency: Demand clear reporting on actual model performance versus marketing claims.
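Access controls and monitoring can live in one thin wrapper around every model call. A minimal sketch, assuming a hypothetical role-to-feature map and an in-memory list standing in for an append-only audit store; in production you would also redact sensitive fields before logging them:

```python
import json
import time
from typing import Callable

# Hypothetical role map: which roles may call which model-backed features.
ROLE_PERMISSIONS = {
    "analyst": {"summarize"},
    "support_agent": {"summarize", "draft_reply"},
}


def governed_call(user: str, role: str, feature: str, prompt: str,
                  model: Callable[[str], str], audit_log: list[str]) -> str:
    """Enforce role-based access, then log the query and response for audits."""
    allowed = feature in ROLE_PERMISSIONS.get(role, set())
    entry = {"ts": time.time(), "user": user, "role": role,
             "feature": feature, "prompt": prompt, "allowed": allowed}
    if not allowed:
        audit_log.append(json.dumps(entry))  # denied attempts are logged too
        raise PermissionError(f"role {role!r} may not use {feature!r}")
    response = model(prompt)
    entry["response"] = response
    audit_log.append(json.dumps(entry))
    return response
```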

This is also where outside expertise pays off. Many enterprises partner with firms like Aloa to validate model behavior before deployment. Combining that with services like fine-tuning ensures your models stay compliant and business-aligned while scaling safely.

Enterprise LLMs and NLP tools are powerful, but the winners will be the organizations that treat them as governed systems, not gadgets. Success comes from testing against your workflows, budgeting realistically, and setting up transparency from the start.

AI-Enhanced Decision Support Systems

Enterprise AI adoption looks impressive on paper: 78% of enterprises now say they use it, yet only 17% report an EBIT lift above 5%. Most AI still sits in pilots or dashboards without shaping real business outcomes.

Decision support is where that changes. By feeding leaders predictions, risk alerts, and operational recommendations they can act on in real time, AI moves from reporting to decision-making. When tied directly to financial, supply chain, or customer metrics, it can then create measurable impact.

Companies seeing results focus on three areas: predictive analytics to anticipate demand and forecast trends, risk management to cut false alarms and highlight genuine threats, and operational optimization to streamline routing, maintenance, and scheduling.

Predictive Analytics Integration

Traditional BI platforms like Tableau or Power BI summarize what already happened. With AI, they become predictive and real-time, giving leaders foresight instead of hindsight.

Google is already demonstrating this shift. Google’s AI Mode integrates directly into BI, supporting live multimodal queries and even handling full transaction workflows. This collapses steps that once required multiple tools. At NASA, the Surya model boosted solar flare prediction accuracy by 16%, which gave mission planners critical lead time to act.

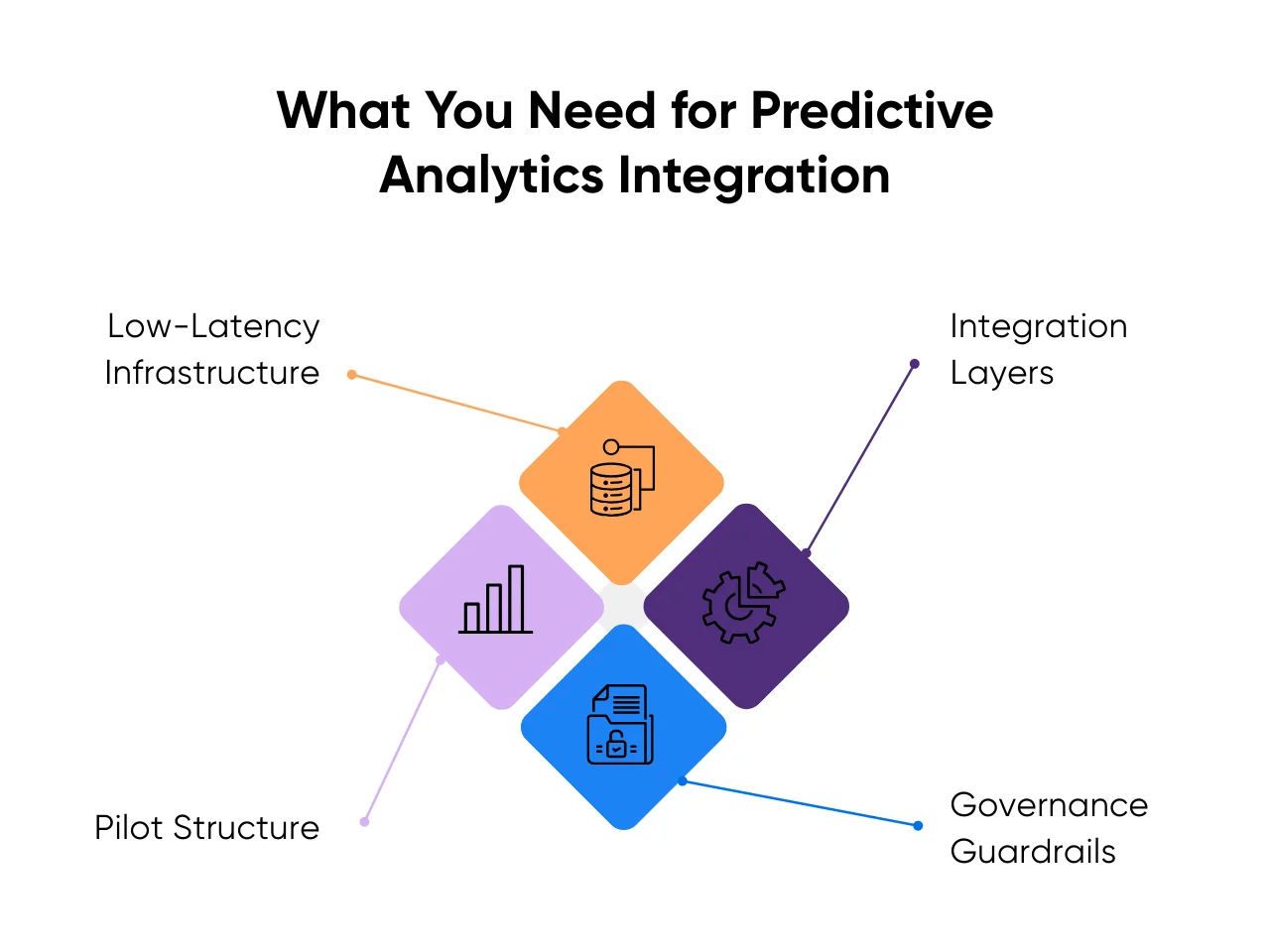

To make this practical inside your enterprise, you’ll need:

- Low-Latency Infrastructure: Streaming data pipelines (Kafka, Flink) plus vector databases for unstructured queries.

- Integration Layers: APIs that connect AI services to BI dashboards, ERP systems, and CRMs to keep analytics embedded in tools teams already use.

- Governance Guardrails: Role-based permissions, query logs, and KPI mapping so AI outputs track to business priorities like churn reduction, gross margin, or delivery accuracy.

- Pilot Structure: Start with one function (finance is often ideal) by letting analysts query financial statements in natural language and test forecasts against actuals.

A realistic goal is cutting reporting cycles by 70–80% and generating predictive forecasts with confidence scores attached, which executives can use to approve or delay investments.
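Testing forecasts against actuals also gives you the confidence band. A minimal sketch, assuming you keep a history of past forecasts and their actuals (all nonzero) and that forecast errors are roughly normal; MAPE is one common accuracy measure, not the only one:

```python
import statistics


def backtest(forecasts: list[float], actuals: list[float]) -> dict:
    """Score past forecasts against actuals (forecasts and actuals must be nonzero)."""
    if len(forecasts) != len(actuals) or len(actuals) < 2:
        raise ValueError("need at least two matched forecast/actual pairs")
    # Mean absolute percentage error: average size of the miss relative to the actual.
    mape = statistics.mean([abs(a - f) / abs(a) for f, a in zip(forecasts, actuals)])
    # Where actuals landed relative to forecasts: actual = forecast * (1 + r).
    ratios = [a / f - 1 for f, a in zip(forecasts, actuals)]
    return {"mape": mape, "bias": statistics.mean(ratios), "ratio_sd": statistics.stdev(ratios)}


def forecast_band(next_forecast: float, bias: float, ratio_sd: float,
                  z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% range for the next actual, assuming normally distributed ratio errors."""
    center = next_forecast * (1 + bias)
    half_width = next_forecast * z * ratio_sd
    return center - half_width, center + half_width


history = backtest(forecasts=[100, 200, 400], actuals=[110, 190, 400])
print(round(history["mape"], 3))  # 0.048
print(forecast_band(500, history["bias"], history["ratio_sd"]))
```

With only a handful of past periods the band is itself uncertain, so it is best read as a rough confidence signal for executives rather than a guarantee.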

Risk Management Applications

Financial services provide some of the clearest evidence of AI-driven risk improvements. Instead of drowning compliance teams in false alarms, AI helps filter noise and highlight genuine issues.

HSBC used AI to cut false fraud positives by 60%. That shift reduced wasted investigation hours while improving customer trust by avoiding unnecessary account freezes. On the innovation side, FutureHouse’s Robin agent discovered a new blindness treatment pathway by running thousands of automated hypothesis tests. This kind of accelerated discovery shows how risk analytics can extend beyond finance into healthcare and research.

For enterprises, deploying AI in risk means setting up the right structure:

- Data Coverage: Feed both historical transaction data and live streams into models for anomaly detection.

- Model Feedback Loops: Retrain monthly on confirmed fraud and cleared cases to cut drift.

- Success Metrics: Measure reduction in false positives (target 50–60%), detection precision (target >95%), and compliance review cycle times (target 20–30% shorter).

- Integration Approach: Embed risk models directly into transaction monitoring systems instead of creating separate review portals.
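The success metrics above are simple ratios once you have alert counts from before and after the model goes live. A minimal sketch with hypothetical numbers; it treats precision as confirmed cases divided by all alerts raised in the new period:

```python
def risk_metrics(baseline_false_positives: int, new_false_positives: int,
                 true_positives: int, baseline_review_days: float,
                 new_review_days: float) -> dict:
    """Compare a model-assisted period with the pre-model baseline."""
    fp_reduction = 1 - new_false_positives / baseline_false_positives
    precision = true_positives / (true_positives + new_false_positives)
    review_cut = 1 - new_review_days / baseline_review_days
    return {
        "fp_reduction": fp_reduction,
        "fp_target_met": fp_reduction >= 0.50,     # target: 50-60% fewer false positives
        "precision": precision,
        "precision_target_met": precision > 0.95,  # target: above 95%
        "review_cycle_cut": review_cut,
        "review_target_met": review_cut >= 0.20,   # target: 20-30% shorter reviews
    }


# Hypothetical quarter: false positives fell from 1,000 to 400, 9,600 alerts were
# confirmed cases, and average compliance review time dropped from 10 to 7.5 days.
print(risk_metrics(1_000, 400, 9_600, 10, 7.5))
```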

Financial institutions report ROI in two forms: direct cost savings (millions in reduced investigation hours) and customer retention improvements from smoother, less error-prone security processes.

Operational Decision Support and Search Evolution

Operations are where AI decision support drives measurable EBIT impact. In manufacturing and supply chains, the key is connecting predictions directly to scheduling, routing, or maintenance actions using computer vision and language model agents.

Even consumer behavior shows how fast expectations are shifting. Apple’s Eddy Cue testified that Safari searches dropped for the first time in 22 years, citing users turning to AI tools like ChatGPT and Perplexity for answers. Google disputed the claim, but the signal is clear: people now expect instant, AI-driven responses. That same expectation is entering the workplace; employees want enterprise search and operations tools to return results in seconds, not hours.

Manufacturing and logistics leaders are already showing the ROI:

- Predictive Maintenance: Siemens reports up to 50% less downtime by using AI to forecast failures before they happen.

- Logistics Optimization: UPS uses AI routing to save 10 million gallons of fuel annually, cutting costs and emissions.

- Supply Chain Forecasting: DHL uses AI demand forecasting to achieve 90–95% accuracy in shipment volume predictions.

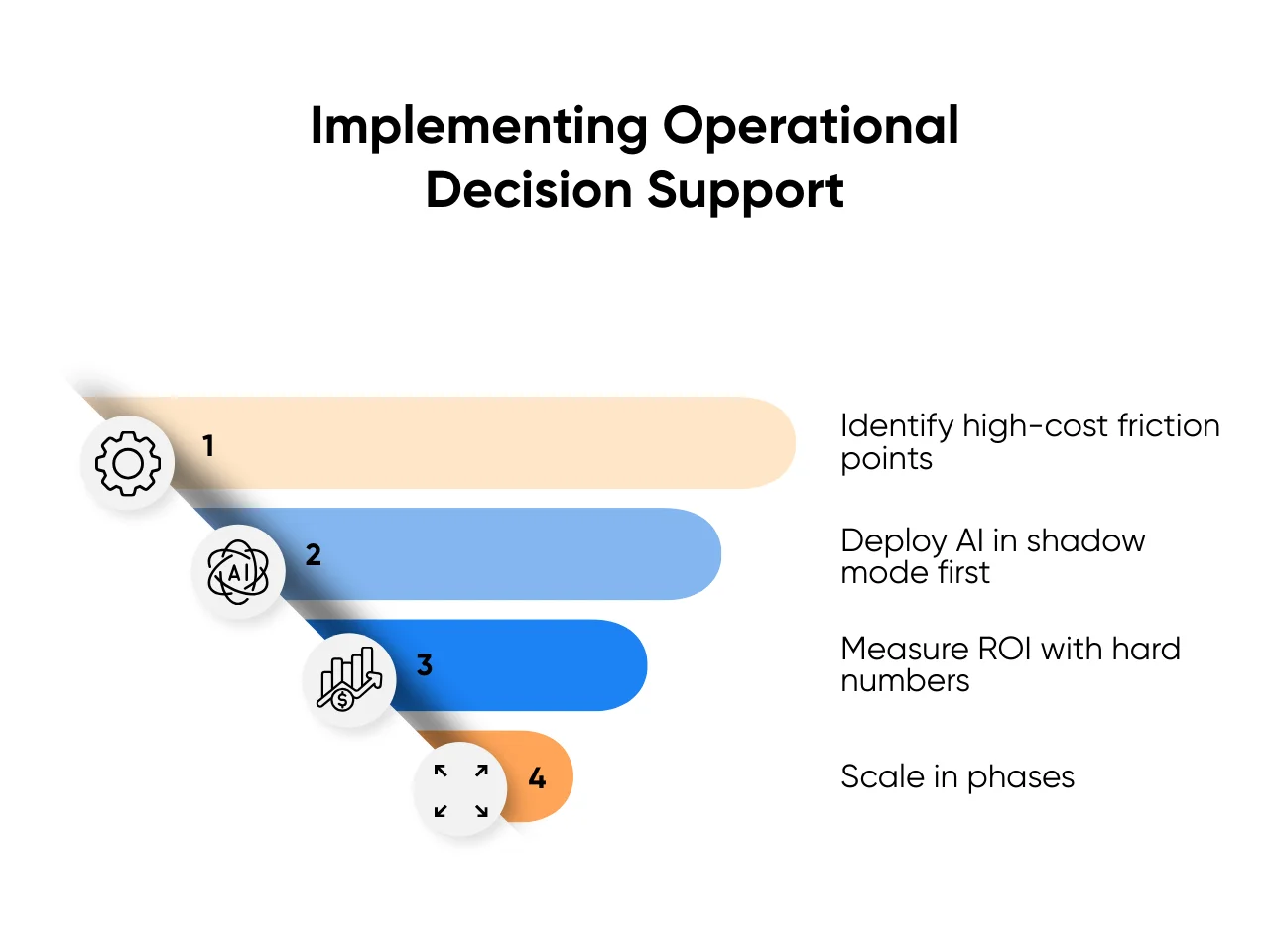

To implement operational decision support effectively:

- Identify high-cost friction points: Machine downtime, delivery delays, and inventory imbalances.

- Deploy AI in shadow mode first: Agents recommend routing or maintenance decisions, but humans approve.

- Measure ROI with hard numbers:

  - % downtime reduction (target 20–30%).

  - On-time delivery improvements (target 10–15%).

  - Cost savings per shipment or per production cycle.

- Scale in phases: Start with one plant or one logistics lane, then expand regionally.
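For each plant or lane, those ROI checks compare a baseline period with the pilot period. A minimal sketch, assuming you can pull downtime, delivery, and cost figures for matching periods; `PeriodMetrics` and `phase_report` are illustrative names, and the on-time figure is a relative change (report percentage points instead if that is how your team reads the 10–15% target):

```python
from dataclasses import dataclass


@dataclass
class PeriodMetrics:
    downtime_hours: float
    on_time_deliveries: int
    total_deliveries: int
    total_cost: float
    units: int  # shipments or production cycles in the period


def phase_report(baseline: PeriodMetrics, pilot: PeriodMetrics) -> dict:
    """ROI checks for one plant or lane: baseline period versus pilot period."""
    baseline_on_time = baseline.on_time_deliveries / baseline.total_deliveries
    pilot_on_time = pilot.on_time_deliveries / pilot.total_deliveries
    return {
        "downtime_reduction": 1 - pilot.downtime_hours / baseline.downtime_hours,
        "on_time_improvement": pilot_on_time / baseline_on_time - 1,  # relative change
        "saving_per_unit": baseline.total_cost / baseline.units - pilot.total_cost / pilot.units,
    }


# Hypothetical lane, before and after AI routing recommendations.
before = PeriodMetrics(downtime_hours=100, on_time_deliveries=800, total_deliveries=1_000,
                       total_cost=50_000, units=1_000)
after = PeriodMetrics(downtime_hours=75, on_time_deliveries=900, total_deliveries=1_000,
                      total_cost=45_000, units=1_000)
print(phase_report(before, after))
```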

The ROI math is compelling: cutting downtime by 20% in a plant with $100M in equipment utilization frees $10–15M annually in productive capacity without capital investment.

AI-enhanced decision support works when it’s embedded into BI, risk, and operations. Predictive analytics shortens reporting cycles, risk AI reduces false alarms and regulatory costs, and operational decision support unlocks millions in capacity and logistics savings. The enterprises wiring these systems into decision flows are already seeing EBIT lift, while others stay stuck in pilot mode.

AI Governance and Ethics in Enterprise

AI adoption is racing ahead, but governance is trailing. In 2024, US federal agencies issued 59 AI-related regulations, more than double the number from the year before. Across 75 countries, legislative mentions of AI rose 21.3% year over year. This surge means enterprises can’t treat governance as an afterthought. Left unchecked, AI pilots risk fines, compliance blowback, or public backlash. Managed well, governance helps companies move faster with regulator approval, customer trust, and operational resilience.

The strongest programs focus on three pillars: governance frameworks, regulatory compliance, and risk management protocols. Let’s break them down:

Governance Framework Development

Governance starts with structure, not paperwork. Surveys show CEO oversight of AI governance correlates most with EBIT impact. In practice, companies that scale AI successfully don’t silo ownership; they share it. Two leaders, typically a tech executive and a business executive, jointly own AI governance to balance performance and compliance.

Enterprises setting up governance can follow this simple structure:

- Establish a governance board: Include IT, data science, legal, compliance, and business unit leaders. Meet monthly to approve new use cases and monitor risks.

- Define clear roles: Product teams propose AI use cases; compliance vets them against regulation; executive sponsors ensure alignment with corporate strategy.

- Publish a roadmap: Larger firms that share AI governance roadmaps internally scale faster and avoid conflicting standards.

For example, a Fortune 500 insurer could create an “AI Council” chaired by the CTO and Chief Risk Officer. The council would review all AI use cases, map them to business KPIs, and approve deployment gates. As a result, compliance review cycles might drop from six months to six weeks, which would accelerate speed-to-market for fraud detection models.

For mid-sized firms, lighter frameworks still work. A governance council can be as lean as quarterly review meetings, KPI dashboards, and mandatory approval gates for high-risk systems. The point is consistency: if AI projects don’t go through the same controls, they won’t scale.

Ethics and Compliance

Global regulation is fragmented, which makes compliance challenging.

- The European Union's AI Act enforces risk tiers: Limited-risk tools such as chatbots carry transparency obligations, high-risk systems face strict testing, and “unacceptable” uses like social scoring are banned.

- The US takes a sector-led approach: Finance, healthcare, and defense agencies set their own standards, making compliance a patchwork.

- China has applied tighter rules to generative AI: Services require pre-deployment reviews and content controls.

For enterprises operating across borders, the safest strategy is to map AI systems to the strictest jurisdiction you serve. A bank with operations in both the EU and US, for instance, could apply EU-level audit and transparency requirements across all regions. That approach may slow the initial rollout but can save millions in rework if regulators tighten rules later.

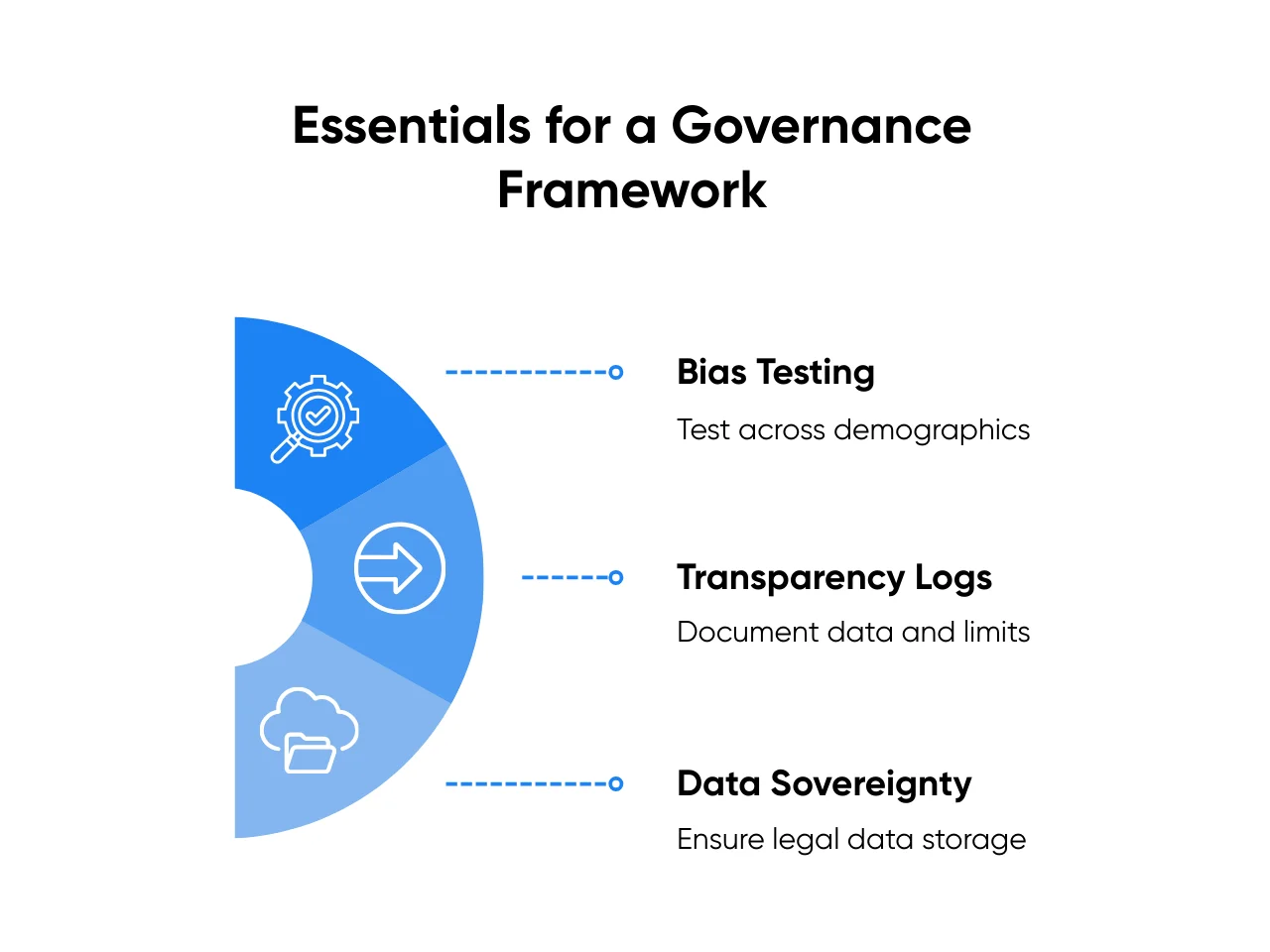

Ethical standards go beyond law. Bias, fairness, and transparency directly affect customer trust. Take the example of an HR AI screening tool: without bias testing, it might favor candidates from overrepresented groups, creating reputational and even legal risks. A sound governance framework would require:

- Bias Testing: Run outputs against demographic subgroups before launch.

- Transparency Logs: Publish documentation outlining how the model was trained, what data it uses, and known limitations.

- Data Sovereignty: Confirm where training data is stored and ensure it complies with local laws.
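Bias testing can start with something as simple as comparing selection rates across subgroups. The sketch below swaps in the four-fifths rule of thumb from US employment guidelines (a group selected at less than 80% of the top group’s rate gets flagged) as one common screen; it is not a complete fairness audit, and the `(group, advanced)` data shape is an assumption:

```python
from collections import Counter


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of candidates the screener advanced, per demographic subgroup."""
    totals: Counter = Counter()
    advanced: Counter = Counter()
    for group, was_advanced in outcomes:
        totals[group] += 1
        advanced[group] += int(was_advanced)
    return {group: advanced[group] / totals[group] for group in totals}


def four_fifths_flags(outcomes: list[tuple[str, bool]], ratio: float = 0.8) -> dict[str, float]:
    """Groups selected at less than `ratio` times the top group's rate, with their impact ratio."""
    rates = selection_rates(outcomes)
    top = max(rates.values(), default=0.0)
    if top == 0:
        return {}  # nobody was advanced, so the ratio test is undefined
    return {group: rate / top for group, rate in rates.items() if rate / top < ratio}
```

A flag is a prompt to investigate the model and its training data before launch, not proof of discrimination on its own.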

Even vendor choices now involve governance tradeoffs. Meta’s 49% stake in Scale AI raised questions about data access and sovereignty. Enterprises relying on vendors must weigh not just performance and price, but also how acquisitions or partnerships affect data safety and compliance.

A practical checklist for leaders includes:

- Confirm vendors can provide model cards or equivalent documentation.

- Run quarterly fairness audits on high-impact AI systems.

- Keep data residency maps current to avoid accidental GDPR or HIPAA violations.

Risk Management Protocols

AI-related incidents are increasing while formal “Responsible AI” evaluations remain rare. That’s a dangerous gap. Enterprises need protocols that make risk management repeatable. Without them, AI systems generate faster errors instead of better insights. With them, AI becomes a moat.

Look at financial services. HSBC cut false fraud positives by 60% using AI fraud detection. That single improvement freed thousands of investigator hours and reduced customer friction by preventing unnecessary card blocks. In healthcare, FutureHouse’s Robin agent tested thousands of hypotheses automatically, discovering a new blindness treatment path. Both examples show AI risk systems not only save money but also unlock innovation.

To build similar protocols, enterprises should:

- Monitor continuously: Log model drift, false positives, and edge cases in production. Retrain monthly to maintain accuracy.

- Design incident response plans: Define escalation thresholds (e.g., if fraud alerts spike by 20% week-over-week), rollback protocols, and audit documentation.

- Budget for compliance: Best practice is 7–20% of AI project spend earmarked for governance, depending on risk tier. Skimping here often costs more when regulators intervene.
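The example escalation threshold is straightforward to automate. A minimal sketch, assuming you have weekly alert counts; the 20% threshold comes from the example above, and treating any alerts after a zero-alert week as a spike is a design choice:

```python
def escalation_weeks(weekly_alerts: list[int], spike_threshold: float = 0.20) -> list[int]:
    """Indexes of weeks where alerts rose by at least `spike_threshold` over the prior week."""
    flagged = []
    for week in range(1, len(weekly_alerts)):
        prev, curr = weekly_alerts[week - 1], weekly_alerts[week]
        if prev == 0:
            spiked = curr > 0  # any alerts after a quiet week count as a spike
        else:
            spiked = (curr - prev) / prev >= spike_threshold
        if spiked:
            flagged.append(week)
    return flagged


print(escalation_weeks([100, 110, 140, 130, 0, 5]))  # [2, 5]
```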

A European bank could deploy a credit risk model and tie compliance to its business strategy, budgeting 15% of the project for governance to fund drift monitoring, bias audits, and documentation. When EU regulators run audits, the bank would clear review in weeks instead of months and launch new credit products ahead of competitors. Governance doesn’t slow it down; it speeds it up.

Many organizations lack the bandwidth to build these systems internally. That’s where external expertise creates leverage. At Aloa, we help enterprises design governance frameworks, set up monitoring dashboards, and integrate compliance reviews into daily operations. By treating governance as part of the development cycle, we cut rework and de-risk adoption.

Key Takeaways

You’ve seen the themes in the current state of AI: accessible tools, smarter automation, enterprise-ready LLMs, decision support built into BI, agentic commerce, global competition, and governance that turns oversight into speed. For tech companies, the message is similar to that of other large enterprises: without clear KPIs and governance, even valuable insights remain trapped in pilots.

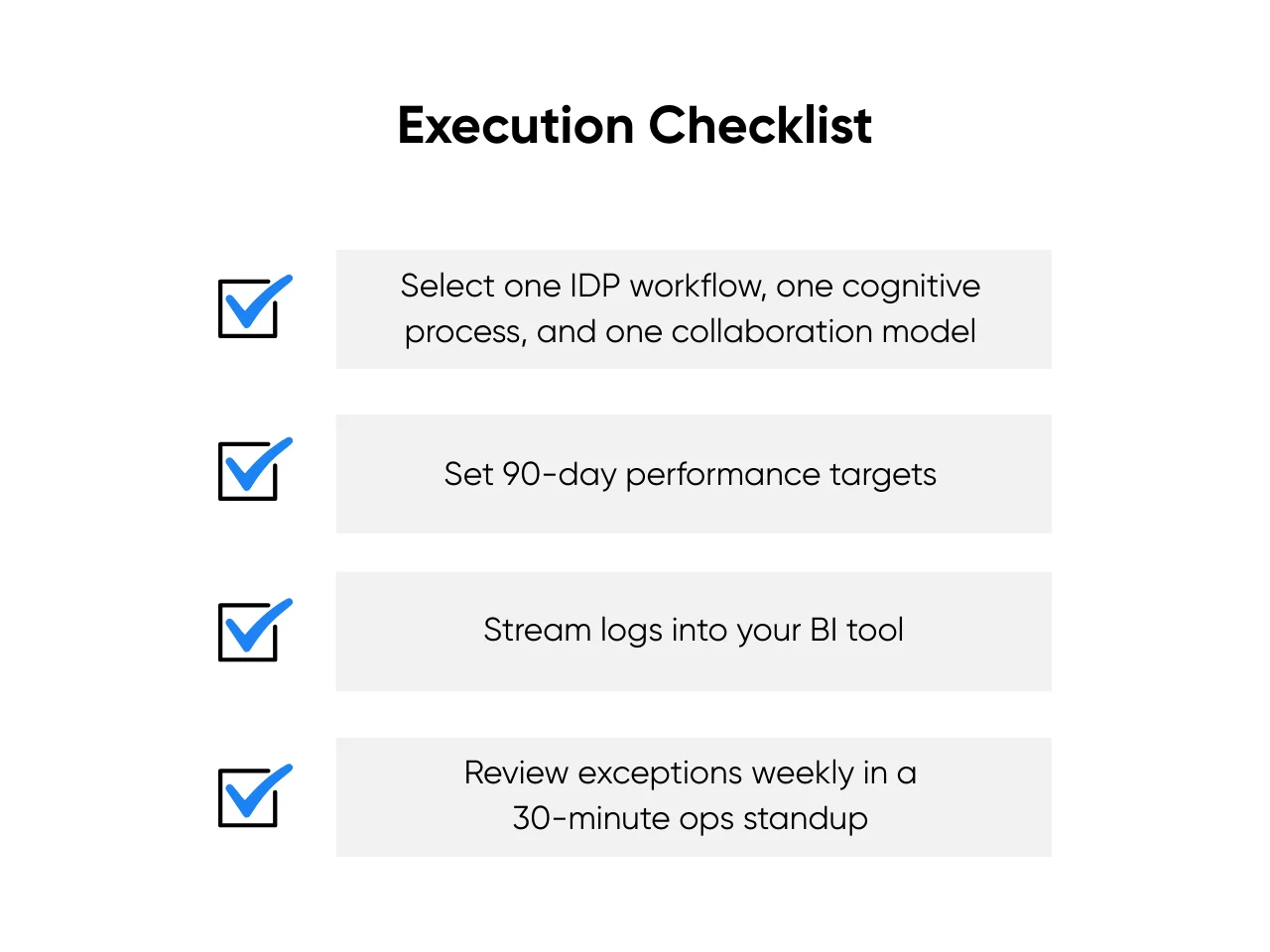

So what should you do next? Your playbook should look like this:

- Buy before you build: Off-the-shelf tools succeed about 67% of the time compared to 33% for internal builds.

- Commit budget: Positive ROI typically shows up when at least 10% of IT spend goes into AI.

- Choose three starting points: One IDP workflow, one agentic process, and one decision-support use case.

- Define your KPIs: Track cycle time, exception rate, cost per task, and CSAT or NPS.

- Start in shadow mode: Run side-by-side for four weeks; expand only once errors stay below 5%.

- Put governance in place: Use approval gates, audit logs, and weekly reviews to keep systems accountable.

Every dollar invested wisely can return multiples by 2030, but only if you scale deliberately. Your edge will come from platform choices, strong data pipelines, and compliance built in from the start. At Aloa, we help enterprises and SMBs follow that path, from scoping pilots and building KPI dashboards to proving ROI in as little as 3 months.

Book a consultation with Aloa and let’s translate the current state of AI into working systems you can trust.

FAQs

How quickly do enterprises need to act on AI implementation to stay competitive?

The window is closing fast. With 78% of companies already using AI, organizations that roll out AI solutions over the next 12–18 months will pull ahead. Early adopters report 15–25% efficiency gains and higher retention. The upside? ROI often shows up in 3–6 months when pilots are tied to solid KPIs.

How do we evaluate AI-as-a-Service vendors effectively?

Look for vendors with proven enterprise performance and crystal-clear pricing. Check that they offer security certifications, integrate with your systems, and can run pilot programs with your workflows. Given industry shifts like Meta’s investment in Scale AI, data sovereignty and long-term vendor reliability matter more than ever. Aloa guides clients through this, with hands-on help vetting vendors, designing pilots that minimize risk, and ensuring data stays protected from day one.

Which business processes are best suited for AI automation?

The best candidates are processes that combine repetitive, rule-driven tasks with some judgment, like invoice processing, document review, compliance checks, or customer support triage. Automating them frees people for higher-value work and delivers impact faster.

What compliance requirements should we plan for?

Across the board, expect to meet GDPR, CCPA, and industry-specific rules like HIPAA or SOX. The EU AI Act adds an extra layer for high-risk use cases. Budget 7–20% of AI spend on compliance. It may feel like a cost, but strong compliance builds trust and speeds broader adoption.